Overview

A process is an instance of a computer program that is currently being executed by the operating system (a program is static, a process is a dynamic representation). A process consists of the program code and its current state/activity. Some processes consist of multiple threads of execution that can be run concurrently.

The operating system also prevents processes from tampering with each other's resources, and provides reliable and predictable communication methods between processes.

To achieve the roles of an operating system, a data structure must be maintained by the operating system for each process which describes the state and resource usage of that process. This enables the OS to control the process according to its policies and is called the Process Control Block, which is stored in the process table of the operating system.

Process Control Block

The operating system stores information required to manage any given process in a PCB for that process. A PCB is used and modified by most operating system utilities such as memory management, scheduling and performance monitoring. The PCB is stored in the kernel address space in the process table.

A PCB can store wide range of varying information depending on the operating system in use, however there tend to be three main categories that this data can be broken down into:

- Process identification data

- Unique identifiers for the process

- Identifiers of the parent process

- User identifiers

- Processor state data (context)

- Data that defines the state of a process when it is suspended, allowing it to be resumed by the operating system later.

- Includes the content of CPU general-purpose registers, stack and frame pointers.

- Program counter (address of the next instruction to execute)

- Particularly useful when performing a context switch.

- Process control data

- Process scheduling state ("ready", "suspended", "blocked") and scheduling priority.

- Event data that occurred while the process was suspended or the process was waiting for (blocked).

- Process structure information

- Inter-process communication information

- Process security information

- Accounting information (performance data)

Multiprogramming and context switching

The use of these data structures is also essential for a multiprogramming environment where the operating system performs "context switches", giving the illusion of multiple processes being executed simultaneously. A context switch is where the operating system temporarily suspends the execution of a process in favour of another process by moving the context from the registers into the main memory, the other process then has its context loaded into the registers from main memory.

Various things can cause a context switch, they are broadly referred to as "interrupts":

- The process issues a system call (a software interrupt); this could be an I/O Request.

- A hardware interrupt occurs, such as a key being pressed on a keyboard or a timer running out.

However, context switches also occur as a normal part of the operating system's scheduling when a process is moved from the "running" state into the "ready" state, or vice-versa.

Multiprogramming efficiency

Multiprogramming came to fruition when it was observed that in single-user systems (where only one process could run at a time), much of the processor time was spent idle waiting for user input or waiting for access to I/O devices. In this single user system, the time taken to complete a set of processes is the sum of the execution time of each individual process, however the processor usage over this time is only a minor fraction.

However, in a multiprogramming system using context switching the amount of time for a set of processes to finish execution is approximately the time needed for the longest running process to complete and processor utilization is much higher.

Process States

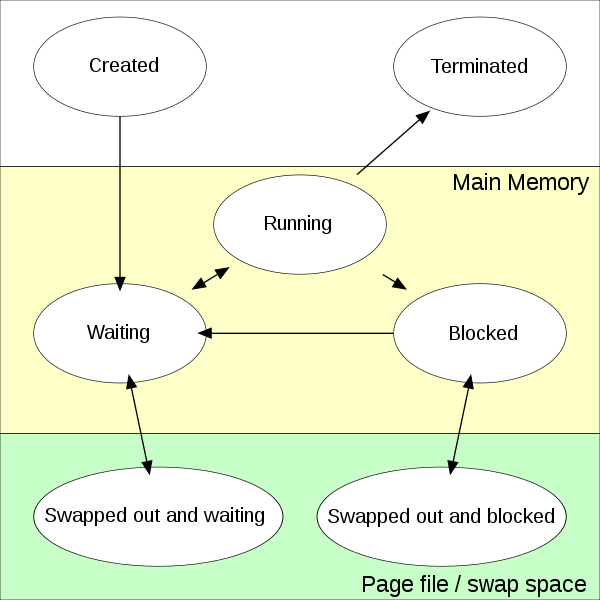

States help the operating system reduce the amount of time the processor is idling.

In its simplest form these states can be represented as "ready" and "executing" where every process is executed in a round-robin fashion (each process is given a certain time to execute and is returned to the back of the queue when its time has run out) and it is assumed every process is always ready for execution. If a process is not ready for execution (i.e. it's awaiting I/O) then this will waste a context switch and reduce throughput of the processor. While this is a valid design, it is wasteful.

To prevent the execution of processes that are awaiting I/O or are in some other form of waiting, we can add an additional state called "Blocked" for these process, and require that they rejoin the ready state before they are executed again. A process can only ever be in one of these three states at a given moment, but they are always occupying the main memory. This is a highly effective design in systems with unlimited memory constraints, as it can maximise the throughput of the system's processor.

However, many systems do not have unlimited memory, and make use of "virtual memory" in which a section of a long-term storage medium (i.e. a hard drive) is emulated as main memory by the operating system (more on virtual memory later). When a process is in virtual memory instead of main memory it is typically referred to as "swapped" or "paged" out. We can thus expand our model with an additional two states for virtual memory enabled systems: "Swapped out and waiting" and "Swapped out and blocked". We can then move into these states should another executing process need additional memory.

The following describes the five state model (and two additional states worth considering).

Created

When the process is created, it is initially in this state. It will remain in this state until the process is approved by a long-term scheduler. This state exists to prevent the ready queue becoming over saturated which could lead to a depletion of processing resources.

A Fork Bomb is an effective denial of service that depletes a system's resources by creating copies of itself, eventually overflowing the ready queue and forcing a system reboot, it cannot function if the process cannot leave the "created" state.

Ready or waiting

In the ready state, the process is loaded into main memory and is awaiting dispatch by the short-term scheduler. When it is chosen by the short-term scheduler for execution, it is moved to the "running" state.

The ready state usually also has a queue associated with it that will be discussed in the scheduling chapter.

Running

The process enters this state when it is being executed by the processor. There will only ever be one program in the running state per processor/core.

When a process has finished execution, it is moved back to the ready state, or alternatively into the blocked state if it has made an I/O request or performed another "blocking" action.

Blocked

A blocked process may not enter the running state. A process can become blocked for various reasons, such as:

- The process is awaiting input

- The process has exhausted the CPU time allocated to it

Terminated

A process can enter the terminated state for various reasons, some of them are:

- Finished execution

- Killed by user

- Program Error

- Operating system intervention; e.g. to resolve a deadlock

- Security Violation

A process is typically removed from memory after entering this state. However, it is possible for a process to become a Zombie (seriously).

Swapped out and waiting

In a system that supports virtual memory a process may be removed from main memory by the mid-term scheduler (if it's low priority), it must renter the waiting state before it can be executed again.

Swapped out and blocked

Similarly, a process that is blocked may be swapped out of main memory. Although not indicated by the diagram, many operating systems allow processes to move directly into the ready state from this point when the process is no longer being blocked.

Threads

A thread is the smallest sequence of instructions that can be managed by an operating system's scheduler. A process always has at least one thread (the primary "thread" of execution). A thread is typically contained within a process, as opposed to being a child that maintains its own Process Control Block. Multiple threads can exist within a single process and threads may share resources (such as memory) with each other, unlike processes. Threads are also much more lightweight than a process, inheriting much of their control data from the containing process. The act of having multiple threads within a single process is called multithreading, and is becoming more and more prominent as processors with multiple cores are becoming pervasive.

As threads are a subset of a process, they tend to use the three-state model of "Running", "Ready" and "Blocked" as they will be swapped out to virtual memory at the same time as their container process.

Multithreading

Multiple threads in a process share resources with each other, but each thread is able to be executed independently of another, this is called concurrency.

On a system with multiple cores, this can dramatically increase the performance of a process, as it is effectively able to do two or more things at once, however this can be a very dangerous concept resulting in undesired behaviours such as race conditions. A race condition is where the input to one thread is dependent on the output from another, if these processes do not occur in the intended order, bad things can happen; for example:

Desired Behaviour

| Thread 1 | Thread 2 | Integer Value | |

|---|---|---|---|

| 0 | |||

| read value | ← | 0 | |

| increase value | 0 | ||

| write back | → | 1 | |

| read value | ← | 1 | |

| increase value | 1 | ||

| write back | → | 2 |

Race Condition Behaviour

| Thread 1 | Thread 2 | Integer Value | |

|---|---|---|---|

| 0 | |||

| read value | ← | 0 | |

| read value | ← | 0 | |

| increase value | 0 | ||

| increase value | 0 | ||

| write back | → | 1 | |

| write back | → | 1 |

In the desired behaviour, the threads effectively run one after the other, negating the benefit of multi-threading, however the race condition behaviour would execute in half the time on a multi-core processor. In most cases we prefer accuracy in our calculations, and this is undesired behaviour. More to the point, multithreading really comes into its own on truly concurrent problems (i.e. those that do not require the input of other threads). Preventing race conditions can also cause deadlocks, so concurrent programming really is a double edged sword. This kind of issue is discussed more in the concurrency section.

It is worth noting that multithreading does not require a multi-core machine, in a single core machine the scheduler will simply perform context switches between threads (a very cheap process for threads) multiple times per unit time. This means that if we have a long running process in one thread, we can still update our user interface in another, as both tasks will be given an appropriate amount of execution time per unit time. Details on how threads are scheduled can be found in the scheduling section.

Types of threading

Kernel-level threading

A kernel-level thread has its scheduling managed by the operating system's scheduler. The kernel exposes an interface to a process for managing its own threads and they can be executed simultaneously by a multi-core processor. This is the simplest possible approach for implementing threads within an operating system.

User-level threading

In a user-level thread, the process only has one kernel-level thread (the primary thread of execution) and the process manages the scheduling of its own user-level threads. This approach cannot take advantage of multiple cores and is not truly concurrent. However, as the threading implementation is contained within the program, it does not need to be implemented at the kernel level and can be used on systems that do not implement threads at the kernel level, it also provides context switches that have effectively no cost at the processor level.

Hybrid threading

The most complex approach as extensive changes are required both within the kernel and the program application code. However this approach can arguably provide context switches with virtually no cost, and allow processes to utilise multi-core processors fully. Microsoft Windows 7 implements this approach of threading.