Computers

A computer, broadly, is any device that can run instructions given to it by an operator. There is an ever-expanding list of subclasses of computers, including desktops, smartphones, mainframes, laptops and embedded devices.

Despite these subclasses, computers tend to share a common trait: they usually have at least one processing unit, which is typically backed by a block of memory. This allows the computer to perform logical and arithmetic operations based on a sequence of instructions stored within the computer's memory. Virtually all computers supplement the central processing unit with some form of peripheral, either to display output (e.g. a monitor) or to accept additional input (e.g. a keyboard).

Early computers didn't use the transistors we have today; instead they relied on vacuum tubes to perform logical operations. To change the programming of many of these computers, the wiring of the computer had to be changed, so although these early computers were general purpose, they were not easily reprogrammable. This led to the need for a flexible design that allowed the computer to be reprogrammed easily, which in turn led to the creation of the stored-program (Von Neumann) architecture discussed a little later on.

Since the advent of the stored-program architecture in 1945, computers have advanced massively with the invention of transistors and many other related technologies. However, the stored-program architecture is still implemented in virtually every modern computer, making it one of the main defining traits of any general-purpose computer.

Stored-program architecture

The earliest recorded stored-program architecture is the Von Neumann architecture, which was first described in 1945. It consists of a few fundamental components:

- A subdivided processing unit with an arithmetic logic unit and processor registers.

- A control unit containing an instruction register and program counter.

- Memory to store both data and instructions.

- External mass storage.

- The notion of Input/Output.
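The components listed above can be illustrated with a toy machine. This is only a minimal sketch: the four opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), the tuple encoding and the memory layout are invented for illustration and do not correspond to any real instruction set.

```python
# Toy stored-program machine: instructions and data share one memory.
# The opcodes and memory layout are hypothetical, chosen for illustration.

def run(memory):
    """Execute the program in `memory` until HALT; return the final memory."""
    pc = 0    # program counter (part of the control unit)
    acc = 0   # accumulator (a single processor register)
    while True:
        opcode, operand = memory[pc]   # fetch the next instruction
        pc += 1
        if opcode == "LOAD":
            acc = memory[operand]
        elif opcode == "ADD":
            acc = acc + memory[operand]   # work done by the arithmetic logic unit
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")

# Cells 0-3 hold instructions, cells 4-6 hold data.
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2,
    3,
    0,
]

result = run(list(program))
print(result[6])   # prints 5
```

Because the program lives in the same memory as the data, reprogramming this machine means writing different values into memory rather than rewiring anything.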

The architecture has a fundamental flaw, commonly referred to as the "Von Neumann bottleneck", which arises because instructions and data are held in the same memory and share only a single bus (buses are the arrows on the diagrams). A suitable metaphor is a single-track lane where only one car may pass at a time, forcing the others to wait: the processor must literally wait for the bus to be clear before it can continue working. This results in a severe performance limitation, as the throughput of this bus has not increased at the same rate as processing speed. The problem can be alleviated by placing cache memory between the processor and the main memory; however, no silver-bullet solution exists for this bottleneck.
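The effect of a cache on bus traffic can be sketched with a small simulation. It assumes a least-recently-used replacement policy and a made-up access trace; real caches work on blocks of memory and use a variety of policies, so the numbers are purely illustrative.

```python
from collections import OrderedDict

def count_bus_transfers(addresses, cache_size):
    """Count the accesses that must cross the bus to main memory."""
    cache = OrderedDict()   # keys are cached addresses, oldest first
    transfers = 0
    for addr in addresses:
        if addr in cache:
            cache.move_to_end(addr)   # hit: served without using the bus
        else:
            transfers += 1            # miss: fetch over the shared bus
            if cache_size > 0:
                cache[addr] = True
                if len(cache) > cache_size:
                    cache.popitem(last=False)   # evict the least recently used
    return transfers

# A hypothetical loop that touches the same four addresses 25 times.
trace = [0, 1, 2, 3] * 25
no_cache = count_bus_transfers(trace, cache_size=0)
with_cache = count_bus_transfers(trace, cache_size=4)
print(no_cache, with_cache)   # prints 100 4
```

The cache only helps when the same addresses are reused; a trace that never repeats an address would cross the bus on every access regardless of cache size.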

While the stored-program architecture itself doesn't pertain to this section of the course, it is worth bearing in mind that limitations in hardware can greatly impact the decisions made by operating system designers.

Operating systems

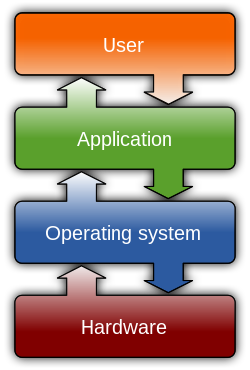

"The operating system acts as an interface between the hardware and the user, which is responsible for the management and coordination of activities and the sharing of resources of a computer, which acts as a host for computing applications that run on the machine." (Wikipedia: Operating Systems)

An operating system is an essential piece of systems software that manages a computer's hardware and resources and provides a set of common services that aid in the execution of computer programs. The operating system provides a layer of abstraction between the hardware of the computer, and the applications running on the computer (which the user interacts with).

The diagram shows a simple hierarchy demonstrating the abstraction between a user of a computer and the underlying systems. To most users the operations of the hardware and the operating system are invisible, and they will only ever interact with the applications; the same applies to most software developers. However, knowledge of the underlying systems is required to maximise an application's effectiveness.

Examples of operating systems

Linux

Linux is based on the Unix principles of system design and is perhaps the most widely deployed operating system in the world, with many servers, embedded systems and smartphones opting to use the Linux kernel. The Linux kernel is free and open source, meaning that anyone can contribute to it and expand upon it. The Linux kernel is used in many distributions, such as Google's Android, Ubuntu and Debian.

The Linux kernel is an example of a monolithic kernel. This means that the kernel itself manages all interactions between applications and the hardware, rather than introducing additional levels of abstraction between the two layers.

Microsoft Windows

Windows is the most prominent operating system on consumer hardware (desktops and laptops), with an estimated market share of around 70% to 90%. It is proprietary software designed by Microsoft for use in home computers, servers and smart devices.

Windows uses a hybrid kernel which, as the name implies, is a trade-off between the monolithic and microkernel designs.

Kernels

A kernel contains the functionality necessary to exert control over a computer's hardware devices. Its main role is to take the data given to it by software programs and translate it into instructions to be executed on a piece of hardware. It is a fundamental part of any modern operating system.

When a program invokes the kernel, it makes what is called a "system call". Monolithic kernels are performance oriented and perform system calls within the same address space. Microkernels are more focused on modularity and maintainability, so they isolate different parts of the kernel from one another; as a result, a system call may be serviced in a different address space to the main body of the kernel.
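As a rough illustration of what a system call looks like from a program's point of view, the sketch below uses Python's `os` module, whose `pipe`, `write`, `read` and `close` functions are thin wrappers over the host operating system's corresponding calls. The message text is arbitrary, and the exact calls made underneath vary between platforms.

```python
import os

# Each os.* call below asks the kernel to do work on the program's behalf.
read_fd, write_fd = os.pipe()                    # kernel creates a pipe
written = os.write(write_fd, b"hello kernel")    # kernel copies bytes into it
os.close(write_fd)
data = os.read(read_fd, 1024)                    # kernel copies them back out
os.close(read_fd)

print(written, data)   # prints 12 b'hello kernel'
```

The program never touches the pipe's buffer directly; it only holds file descriptors and relies on the kernel to move the data.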

Many of the roles of an operating system are fulfilled by the kernel.

Monolithic kernels

A monolithic kernel keeps the entire operating system in the same address space, effectively as a single process. This single process provides a high-level virtual interface over the computer hardware. It implements all of the roles of an operating system, such as process management and concurrency. Modern monolithic kernels allow drivers for individual devices to be loaded at runtime, as opposed to having to be compiled into the kernel.

Advantages of a monolithic kernel include:

- Contiguity of the memory space results in higher performance.

- A single process generally requires less space than a multi-process system, reducing the deployment size.

- As an extension of its reduced complexity and size, it is likely, but not guaranteed, to be more secure.

The already low deployment size, coupled with the ability to discard the parts of a monolithic kernel that are not needed, has led to their widespread use in embedded systems, where space constraints are tight (some distributions of Linux weigh in at under 5MB in installed size). Monolithic kernels typically use a simple set of hardware abstractions to provide a rich API of system calls to other software.

Monolithic kernels do have a variety of problems, mainly related to code maintenance. Some of these are:

- Developing the kernel can be difficult due to its relative isolation from the rest of the system; a reboot is required to reload the kernel fully.

- As the kernel is "privileged", it runs without the protection checks applied to ordinary programs, so a bug in the kernel can have serious side effects on other kernel functionality.

- A security flaw in the kernel could lead to a system wide compromise. The kernel is implicitly trusted.

- Monolithic kernels typically become very large if they are to support all kinds of hardware.

- As modules run in the same address space as the kernel, they can also interact maliciously with kernel data.

- Monolithic kernels have to be recompiled for every architecture they are deployed on.

Microkernels

A microkernel only exposes the "bare minimum" functionality and instead relies on a set of servers communicating via the kernel to expose additional functionality to programs. This approach minimizes the amount of code running in kernel mode. The kernel communicates with the user space using a simple client-server architecture.

Like monolithic kernels, they provide a simple set of hardware abstractions that are then used to provide rich system calls which applications can use; the difference is in the amount of functionality each design exposes. A microkernel requires additional "servers" to provide advanced functionality such as file systems, advanced schedulers and network stacks. Only functionality that absolutely requires privileged access to the system is implemented at the kernel level.

Due to their modular design and simple implementation, microkernels provide a number of advantages over monolithic kernels:

- Code maintenance is easier, as very little functionality will ever need to be changed in the kernel itself due to its simplicity.

- As the kernel is not tied to the operation of the system level "servers", they can be swapped in memory without adverse effects.

- Kernel reboot is not required to test changes because "servers" can be swapped.

- As the "servers" operate in their own non-privileged memory spaces, they are unable to affect the kernel or even each other, thus reducing the impact of bugs and security issues.

- If an individual "server" crashes, it can simply be restarted with only minor impact to the system.

However, microkernels need a fast and reliable messaging mechanism (typically referred to as "Inter-process Communication" or "IPC"), which can be complicated to implement within the kernel and adds overhead to the running of the system, usually reducing the overall efficiency of the kernel. Although the kernel needed for basic system operation is very small, the overall size of a microkernel OS tends to be larger than its monolithic counterpart because of the many additional "servers" required for operation.
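The client-server message passing described above can be sketched as follows. This is only an analogy: the `file_server` function, its file table and the `send` helper are hypothetical, and the sketch uses threads and in-process queues, whereas real microkernel servers run in separate address spaces and exchange messages through the kernel.

```python
import queue
import threading

def file_server(inbox):
    """A hypothetical user-space 'server' that answers read requests."""
    files = {"/etc/motd": "welcome"}
    while True:
        message = inbox.get()
        if message is None:   # shutdown signal
            break
        path, reply_box = message
        reply_box.put(files.get(path, "ERROR: not found"))

def send(inbox, path):
    """Client side: send a request and block until the reply arrives."""
    reply_box = queue.Queue()
    inbox.put((path, reply_box))
    return reply_box.get()

inbox = queue.Queue()   # stands in for the kernel's IPC channel
server = threading.Thread(target=file_server, args=(inbox,))
server.start()

found = send(inbox, "/etc/motd")
missing = send(inbox, "/no/such/file")

inbox.put(None)   # ask the server to stop
server.join()
print(found, "|", missing)   # prints welcome | ERROR: not found
```

Every request costs two message transfers (one each way), which is the overhead that a direct function call inside a monolithic kernel avoids.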

The disadvantages of microkernels can be summarised as follows:

- Larger memory footprint due to additional processes needed for operation.

- Inter-process Communication has a negative impact on the performance of system calls.

- Bugs in the IPC system can be difficult to fix.

- Due to lack of privilege, the complexity of managing processes and various other operating system roles increases.

In very simple applications where few processes need to be running (e.g. mission-critical embedded systems), many of these disadvantages do not apply; they only arise in complicated systems with multiple processes.

A further advantage of the microkernel design is that high-level languages can be used in the operating system design. This can greatly simplify the design of individual components within the OS.

Hybrid kernels

Hybrid kernels tend to be microkernels with some additional functionality implemented at the kernel level for performance. They are a compromise between the performance of monolithic kernels and the maintainability and reliability of microkernels. Typically the "non-essential" code implemented in hybrid kernels is deemed performance critical, such as advanced process management and scheduling. In practice, hybrid kernels such as those of Windows and OS X can still load modules (for example device drivers) into privileged mode, and they can also load "servers" in the user space like microkernels.

Advantages of this approach are:

- Faster development time for drivers and "servers" that run in the user space, as they do not require a system reboot.

- Modules and "servers" can be run "on demand" depending on a system's capabilities, reducing the memory footprint.

- Performance rivals that of a monolithic kernel.

Disadvantages to this approach are fairly minimal, and are mainly related to the possibility of bugs allowing privileged access.

Both Microsoft Windows and Apple's OS X rely on a hybrid kernel design.

Roles of the operating system

The primary function of an operating system is to provide an environment in which additional applications can run, and to act as an interface between the user and the computer hardware. All application software relies on the operating system, regardless of its complexity, and the components of the operating system work together to aid in the overall running of the computer.

All of these roles are covered in more detail within the next few chapters.

Process Management

The operating system's roles in regards to process management are:

- Allocation of resources to processes.

- Enabling the exchange and sharing of data between multiple processes.

- Protecting a process's resources from other processes.

- Enabling synchronisation between processes.

To achieve these goals, the operating system must maintain a data structure for each process in which the current state of the process is stated. The data structure may also contain information on the resources currently in use or owned by a process.
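Such a per-process data structure is commonly called a process control block. A minimal sketch might look like the following; the field names and the set of states are assumptions made for illustration, and real kernels record far more.

```python
from dataclasses import dataclass, field
from enum import Enum

class State(Enum):
    """Hypothetical set of process states."""
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"
    TERMINATED = "terminated"

@dataclass
class ProcessControlBlock:
    pid: int
    state: State = State.READY
    program_counter: int = 0
    open_files: list = field(default_factory=list)     # resources in use
    memory_pages: list = field(default_factory=list)   # resources owned

# The operating system keeps one entry per process.
process_table = {}

def create_process(pid):
    """Register a new process in the table and return its control block."""
    pcb = ProcessControlBlock(pid=pid)
    process_table[pid] = pcb
    return pcb

pcb = create_process(1)
pcb.state = State.RUNNING
pcb.open_files.append("/tmp/log.txt")
print(pcb)
```

Keeping the state and the resource lists in one record is what lets the operating system suspend a process, protect its resources, and later resume it where it left off.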

Scheduling

Scheduling is an operating system task which attempts to ensure that each process gets "fair" access to hardware resources such as processor time or communications bandwidth. Ensuring that each process has "fair" access to the hardware resources of the machine helps increase the quality of service and reduces the load on the system produced by any one program.

A scheduler is used to help with:

- Throughput - The total number of processes that complete their execution per time unit.

- Latency, specifically:

  - Turnaround time - Total time between the start and end of any given process.

  - Response time - The amount of time from the start of a process to the first usable output.

- Fairness - In which every process is given an appropriate amount of access to the CPU according to its priority.

- Waiting Time - Reducing the amount of time that a given process spends in the "ready queue".

However, not all of these criteria can be met simultaneously, so the scheduler usually settles on a compromise, although a preference for a particular criterion (e.g. response time) may be specified and will be taken into account by the scheduler.
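To make turnaround time and waiting time concrete, here is a minimal sketch of one well-known policy, round-robin scheduling. It assumes every process arrives at time 0 and ignores the cost of switching between processes; the process names and burst times are made up.

```python
from collections import deque

def round_robin(burst_times, quantum):
    """Simulate round-robin scheduling for processes that all arrive at t=0.

    Returns {name: (turnaround_time, waiting_time)}.
    """
    remaining = dict(burst_times)
    ready_queue = deque(burst_times)   # process names in arrival order
    clock = 0
    results = {}
    while ready_queue:
        name = ready_queue.popleft()
        slice_length = min(quantum, remaining[name])
        clock += slice_length
        remaining[name] -= slice_length
        if remaining[name] > 0:
            ready_queue.append(name)   # not finished: back of the queue
        else:
            turnaround = clock         # arrival time is 0
            results[name] = (turnaround, turnaround - burst_times[name])
    return results

stats = round_robin({"A": 5, "B": 3, "C": 1}, quantum=2)
for name in sorted(stats):
    print(name, stats[name])
# prints:
# A (9, 4)
# B (8, 5)
# C (5, 4)
```

A smaller quantum gives each process the CPU sooner, which tends to improve response time, but in a real system it also means more switches between processes, which is one example of the compromise described above.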

Memory Management

The operating system must provide ways in which programs can dynamically allocate portions of memory at their request, and a way in which it can take back unused memory. The operating system must also ensure the security of a process's allocated memory by preventing its use by other processes.

The operating system can also separate the addresses used by a process from the actual physical addresses of the main memory. This is called "Virtual Memory" and effectively increases the amount of RAM available to the system by moving data that is not in use to external media such as a hard disk (a technique known as paging) and only retrieving it when needed.
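The separation of virtual and physical addresses can be sketched with a per-process page table. The page size, the table contents and the `PageFault` exception are assumptions for illustration; in a real system the translation is performed by hardware, with the operating system stepping in when a page is missing.

```python
PAGE_SIZE = 4096   # assumed page size in bytes

class PageFault(Exception):
    """Raised when the requested page is not resident in physical memory."""

def translate(page_table, virtual_address):
    """Map a virtual address to a physical one via a per-process page table."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame = page_table.get(page_number)
    if frame is None:
        raise PageFault(f"page {page_number} is not in memory")
    return frame * PAGE_SIZE + offset

# Hypothetical page table: virtual page 0 is in frame 5, page 1 is in
# frame 2, and page 2 has been moved out to disk.
page_table = {0: 5, 1: 2, 2: None}

physical = translate(page_table, 4100)   # virtual page 1, offset 4
print(physical)   # prints 8196
```

On a page fault, the operating system would load the missing page back from disk into a free frame, update the table, and retry the access.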

Concurrency

Concurrency is the execution of multiple processes simultaneously within the same time unit. Typically it is an extension to the scheduler to allow the operating system to utilise the multiple processing units that modern computers now have.